New platform overcomes time and space constraints

A walk from the famous Place de la Concorde to the beautiful Champs-Élysées is one of the top things to do in Paris. In a contact-free era, is it possible to feel the romantic atmosphere of Paris without being there in person? This may soon be possible thanks to domestic technology. Users can travel to the romantic city of Paris in virtual reality; however, making virtual worlds realistic and believable takes significant time and money. Against this backdrop, a team led by Professor Woontack Woo developed a platform that uses spatial information so that anyone can easily create and experience three-dimensional worlds.

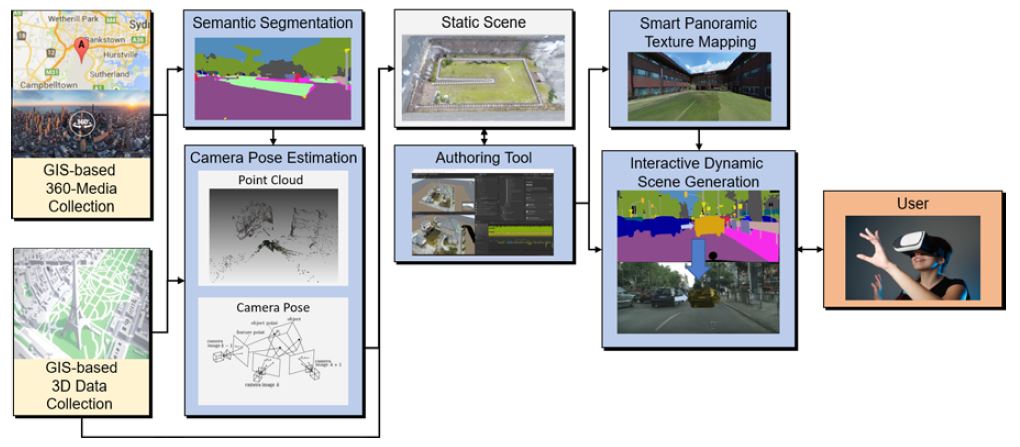

Algorithm and authoring tools for easier VR content production

Professor Woo's team developed an algorithm that estimates camera pose based on 360-degree panoramic images and spatial information provided by GIS services. By combining the coordinates of Google's 360-degree images with camera pose estimation, image textures can be mapped onto virtual worlds. The team also developed a scene rendering technique using Smart Panoramic Texture Mapping (SPTM), which stitches two-dimensional images to three-dimensional images, enabling scenes to be generated while walking. Interactive dynamic objects were generated using a generative adversarial network (GAN), a deep learning technique. Three-dimensional models were provided for moving objects, such as watches and vehicles, so that they can be moved into three-dimensional worlds. This is a key technique that supports user interactions in the content production environment.
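
To give a rough sense of how a known camera pose lets panorama pixels be used as textures, the sketch below projects a 3D point into an equirectangular 360-degree image. It is only an illustration and not the team's algorithm or SPTM: the function name `world_to_panorama_pixel`, the axis conventions (x east, y north, z up), and the yaw-only pose are all assumptions made for this example.

```python
import math


def world_to_panorama_pixel(point, cam_pos, cam_yaw_deg, width, height):
    """Project a 3D world point into an equirectangular panorama.

    Assumed conventions (hypothetical, not taken from the article):
    - world axes: x = east, y = north, z = up, in metres
    - cam_yaw_deg: compass heading of the panorama's centre column,
      measured clockwise from north
    - image: column 0 is the left edge, row 0 is the top (zenith)
    """
    dx = point[0] - cam_pos[0]
    dy = point[1] - cam_pos[1]
    dz = point[2] - cam_pos[2]
    horizontal = math.hypot(dx, dy)
    if horizontal == 0.0 and dz == 0.0:
        raise ValueError("point coincides with the camera position")

    # Bearing of the point, clockwise from north, relative to the camera heading.
    bearing = math.degrees(math.atan2(dx, dy))
    rel = (bearing - cam_yaw_deg + 180.0) % 360.0 - 180.0  # wrapped to [-180, 180)

    # Elevation of the point above the horizon, in [-90, 90] degrees.
    elev = math.degrees(math.atan2(dz, horizontal))

    # Longitude maps linearly to columns, latitude to rows.
    u = (rel / 360.0 + 0.5) * width
    v = (0.5 - elev / 180.0) * height
    return u, v


# A point 10 m due north of the camera, at camera height, lands in the image centre.
print(world_to_panorama_pixel((0.0, 10.0, 0.0), (0.0, 0.0, 0.0), 0.0, 4096, 2048))
# (2048.0, 1024.0)
```

Sampling the panorama's colour at the returned `(u, v)` for each vertex of a 3D model is one simple way to texture that model from a street-level image. A full pipeline would also need roll and pitch in the pose, plus occlusion handling, which this sketch leaves out.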

Authoring tools for interaction and navigation were also developed. The tools support object detection and location tracking so that users can interact with objects in the S3D 360VR environment, and they integrate property editing and related information so that VR content with industrial applications can be produced conveniently.

“Providing new experiences in augmented reality for the contact-free era”

Professor Woontack Woo said, “The future will be a digital twin era, with users sharing their emotions and social relations in virtual reality. Individuals will be users, and at the same time, content producers.”

Through further research, the team plans to provide a VR content production platform that uses three-dimensional spatial data to let users interact freely in virtual space without time and space constraints. The study was conducted under the Information and Communications/Broadcasting R&D Program of the Institute for Information & Communications Technology Planning & Evaluation (IITP), an agency under the Ministry of Science and ICT. The team published a related paper in an international journal and applied for one PCT patent.

Prof. Woontack Woo

2020 KI Annual Report